The analysis of covariance (ANCOVA) has notably proven to be an effective tool in a broad range of scientific applications. Despite the well-documented literature about its principal uses and statistical properties, the corresponding power analysis for the general linear hypothesis tests of treatment differences remains a less discussed issue. The frequently recommended procedure is a direct application of the ANOVA formula in combination with reduced degrees of freedom and a correlation-adjusted variance. This article aims to explicate the conceptual problems and practical limitations of the common method. An exact approach is proposed for power and sample size calculations in ANCOVA with random assignment and multinormal covariates. Both theoretical examination and numerical simulation are presented to justify the advantages of the suggested technique over the current formula. The improved solution is illustrated with an example regarding the comparative effectiveness of interventions. In order to facilitate the application of the described power and sample size calculations, accompanying computer programs are also presented.


The analysis of covariance (ANCOVA) was originally developed by Fisher (1932) to reduce error variance in experimental studies. Its essential nature and principal use were well explicated by Cochran (1957) and subsequent articles in the same issue of Biometrics. The value and use of ANCOVA have also received considerable attention in social science, for example, see Elashoff (1969), Keselman et al. (1998), and Porter and Raudenbush (1987). Comprehensive introduction and fundamental principles can be found in the excellent texts of Fleiss (2011), Huitema (2011), Keppel and Wickens (2004), Maxwell and Delaney (2004), and Rutherford (2011). It is essential to note that ANCOVA provides a useful approach for combining the advantages of two highly acclaimed procedures of analysis of variance (ANOVA) and multiple linear regression. The extensive literature shows that it is one of the major methods of statistical analysis in applied research across many scientific fields.

The importance and implications of statistical power analysis in scientific research are well demonstrated in Cohen (1988), Kraemer and Blasey (2015), Murphy et al. (2014), and Ryan (2013), among others. Accordingly, it is of great practical value to develop theoretically sound and numerically accurate power and sample size procedures for detecting treatment differences within the context of ANCOVA. There are numerous published sources that address statistical theory and applications of power analysis for ANOVA and multiple linear regression. Specifically, various algorithms and tables for power and sample size calculations in ANOVA have been presented in the classic sources of Bratcher et al. (1970), Pearson and Hartley (1951), Scheffe (1961), and Tiku (1967, 1972). The corresponding results for multiple regression and correlation, especially the distinct notion of fixed and random regression settings, were given in Gatsonis and Sampson (1989), Mendoza and Stafford (2001), Sampson (1974), and Shieh (2006, 2007). However, relatively little research has attempted to address the corresponding issues for ANCOVA.

This lack of further discussion can partly be attributed to the simple framework and conceptual modification of Cohen (1988) on the use of the ANOVA method for power evaluation in ANCOVA research. It is argued that the ANCOVA of original responses is essentially the ANOVA of the regression-adjusted or statistically controlled measurements obtained from the linear regression of unadjusted responses on the covariates that is common to all treatment groups. However, some modifications are required to account for the number of covariate variables and the strength of correlation between the response and covariate variables. Accordingly, both the error degrees of freedom and the variance component are reduced. Then, the power and sample size computations in ANCOVA proceed in exactly the same way as in analogous ANOVA designs. The methodology of Cohen (1988) has become common practice for power analysis in ANCOVA settings, as repeatedly demonstrated in Huitema (2011), Keppel and Wickens (2004), Levin (1997), Maxwell and Delaney (2004), and Yang et al. (1996).

It is well known that ANOVA adopts the fundamental assumptions of independence, normality, and constant variance. The corresponding hypothesis testing and theoretical considerations are valid only if these assumptions are satisfied. The consequences of violations of the independence assumption in ANOVA have been reported in Kenny and Judd (1986), Pavur and Nath (1984), and Scariano and Davenport (1987), among others. An essential assumption underlying ANCOVA is that the regression coefficients associating the response variable with the covariate variables are the same for each treatment group. Accordingly, the regression adjustment in Cohen’s (1988, pp. 379–380) covariance framework uses the common regression coefficient estimates derived from the multiple regression between the response and covariate variables across all treatment groups. Unlike the original responses, the adjusted responses are generally correlated and thus violate the independence-of-observations assumption of ANOVA. Therefore, Cohen’s (1988) procedure is intrinsically inexact, even with the technical considerations of deflated degrees of freedom and a correlation-adjusted variance. Consequently, this prevailing method provides only approximate power and sample size calculations in ANCOVA designs. It should be stressed that no research to date has acknowledged this crucial problem, and the result has most likely been interpreted as an exact solution.

Toward the goal of choosing the most appropriate methodology for ANCOVA studies, the present article focuses on the Wald tests for the general linear hypothesis of treatment effects. Under the two different assumptions of a priori specified covariate values and multinormal distributed covariate variables, the exact power functions of the Wald statistic are derived. The analytic derivations for a general linear hypothesis require the involved operations of matrix algebra and sophisticated evaluations of matrix t variables that have not been reported elsewhere. Detailed numerical investigations were conducted to evaluate the existing formulas for power and sample size computations under a wide range of model settings, including non-normal covariate variables. According to the analytic justification and empirical assessment, the suggested approach has a decisive advantage over the conventional method. An applied example regarding the comparative effectiveness of interventions is presented to illustrate the distinct features and practical usefulness of the proposed techniques. Computer codes are also presented to implement the recommended power calculation and sample size determination in planning ANCOVA studies.

A one-way fixed-effects ANCOVA model with multiple covariates can be expressed as

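$$Y_{ij} = \upmu_i + X_{ij1}\upbeta_1 + \cdots + X_{ijP}\upbeta_P + \upvarepsilon_{ij}, \qquad (1)$$

where \(Y_{ij}\) is the score of the jth subject in the ith treatment group on the response variable, \(\upmu_i\) is the ith group intercept, \(X_{ijk}\) is the score of the jth subject in the ith treatment group on the kth covariate, \(\upbeta_k\) is the slope coefficient of the kth covariate, and \(\upvarepsilon_{ij}\) is the independent \(N(0, \upsigma^2)\) error with \(i = 1, \ldots, G\, (\ge 2)\), \(j = 1, \ldots, N_i\), and \(k = 1, \ldots, P\, (\ge 1)\). The least-squares estimator for the ith intercept \(\upmu_i\) is given by

$$\hat{\upmu}_i = \bar{Y}_i - \bar{\mathbf{X}}_i^{\mathrm{T}}\hat{\boldsymbol{\upbeta}},$$

where \(\bar{Y}_i = \sum_{j=1}^{N_i} Y_{ij}/N_i\), \(\mathbf{X}_{ij} = (X_{ij1}, \ldots, X_{ijP})^{\mathrm{T}}\), \(\bar{\mathbf{X}}_i = \sum_{j=1}^{N_i}\mathbf{X}_{ij}/N_i\), \(\hat{\boldsymbol{\upbeta}} = \mathbf{S}_{XX}^{-1}\mathbf{S}_{XY}\), \(\mathbf{S}_{XX} = \sum_{i=1}^G\sum_{j=1}^{N_i}(\mathbf{X}_{ij} - \bar{\mathbf{X}}_i)(\mathbf{X}_{ij} - \bar{\mathbf{X}}_i)^{\mathrm{T}}\), and \(\mathbf{S}_{XY} = \sum_{i=1}^G\sum_{j=1}^{N_i}(\mathbf{X}_{ij} - \bar{\mathbf{X}}_i)(Y_{ij} - \bar{Y}_i)\). Conditional on the covariate values,

$$\hat{\upmu}_i \sim N\{\upmu_i, \upsigma^2(1/N_i + \bar{\mathbf{X}}_i^{\mathrm{T}}\mathbf{S}_{XX}^{-1}\bar{\mathbf{X}}_i)\} \quad\text{and}\quad \mathrm{Cov}(\hat{\upmu}_i, \hat{\upmu}_{i'}) = \upsigma^2\,\bar{\mathbf{X}}_i^{\mathrm{T}}\mathbf{S}_{XX}^{-1}\bar{\mathbf{X}}_{i'}$$

for \(i \ne i'\), i and \(i' = 1, \ldots, G\). Because the covariances between the regression-adjusted estimators \(\{\hat{\upmu}_1, \ldots, \hat{\upmu}_G\}\) are generally not zero, they should not be treated as independent variables. For notational simplicity, these properties are expressed in matrix form:

$$\hat{\boldsymbol{\upmu}} \sim N_G\{\boldsymbol{\upmu}, \upsigma^2(\mathbf{D} + \mathbf{M}\mathbf{S}_{XX}^{-1}\mathbf{M}^{\mathrm{T}})\},$$

where \(\hat{\boldsymbol{\upmu}} = (\hat{\upmu}_1, \ldots, \hat{\upmu}_G)^{\mathrm{T}}\), \(\boldsymbol{\upmu} = (\upmu_1, \ldots, \upmu_G)^{\mathrm{T}}\), \(\mathbf{D} = \mathrm{diag}(1/N_1, \ldots, 1/N_G)\), and \(\mathbf{M} = (\bar{\mathbf{X}}_1, \ldots, \bar{\mathbf{X}}_G)^{\mathrm{T}}\) is the \(G \times P\) matrix of group covariate means. The adjusted group means are the expected group responses evaluated at the grand covariate means:

$$\upmu_i^* = \upmu_i + \sum_{k=1}^P \bar{X}_k\upbeta_k, \quad i = 1, \ldots, G,$$

where \(\bar{X}_k = \sum_{i=1}^G\sum_{j=1}^{N_i} X_{ijk}/N_T\), \(k = 1, \ldots, P\), and \(N_T = \sum_{i=1}^G N_i\). A natural and unbiased estimator of the adjusted group mean \(\upmu_i^*\) is

$$\hat{\upmu}_i^* = \hat{\upmu}_i + \bar{\mathbf{X}}^{\mathrm{T}}\hat{\boldsymbol{\upbeta}} = \bar{Y}_i - (\bar{\mathbf{X}}_i - \bar{\mathbf{X}})^{\mathrm{T}}\hat{\boldsymbol{\upbeta}}.$$

Then, the least-squares estimators \(\hat{\upmu}_i^*\) of the adjusted group means \(\upmu_i^*\) have the following distributions:

$$\hat{\upmu}_i^* \sim N[\upmu_i^*, \upsigma^2\{1/N_i + (\bar{\mathbf{X}}_i - \bar{\mathbf{X}})^{\mathrm{T}}\mathbf{S}_{XX}^{-1}(\bar{\mathbf{X}}_i - \bar{\mathbf{X}})\}] \quad\text{and}\quad \mathrm{Cov}(\hat{\upmu}_i^*, \hat{\upmu}_{i'}^*) = \upsigma^2(\bar{\mathbf{X}}_i - \bar{\mathbf{X}})^{\mathrm{T}}\mathbf{S}_{XX}^{-1}(\bar{\mathbf{X}}_{i'} - \bar{\mathbf{X}}),$$

where \(\bar{\mathbf{X}} = \sum_{i=1}^G\sum_{j=1}^{N_i}\mathbf{X}_{ij}/N_T = (\bar{X}_1, \ldots, \bar{X}_P)^{\mathrm{T}}\), for \(i \ne i'\), i and \(i' = 1, \ldots, G\). The vector of adjusted group mean estimators \(\hat{\boldsymbol{\upmu}}^* = (\hat{\upmu}_1^*, \ldots, \hat{\upmu}_G^*)^{\mathrm{T}}\) has the distribution

$$\hat{\boldsymbol{\upmu}}^* \sim N_G\{\boldsymbol{\upmu}^*, \upsigma^2(\mathbf{D} + \mathbf{M}_c\mathbf{S}_{XX}^{-1}\mathbf{M}_c^{\mathrm{T}})\},$$

where \(\boldsymbol{\upmu}^* = (\upmu_1^*, \ldots, \upmu_G^*)^{\mathrm{T}}\), \(\mathbf{M}_c = \mathbf{M} - \mathbf{1}_G\bar{\mathbf{X}}^{\mathrm{T}}\), and \(\mathbf{1}_G\) is a \(G \times 1\) vector of ones. To test the general linear hypothesis about treatment effects or adjusted mean effects in terms of

$$\mathrm{H}_0: \mathbf{C}\boldsymbol{\upmu}^* = \mathbf{0}_c \quad\text{versus}\quad \mathrm{H}_1: \mathbf{C}\boldsymbol{\upmu}^* \ne \mathbf{0}_c,$$

where C is a \(c \times G\) contrast matrix of full row rank and \(\mathbf{0}_c\) is a \(c \times 1\) null column vector, the Wald test statistic is of the form

$$W^* = \frac{(\mathbf{C}\hat{\boldsymbol{\upmu}}^*)^{\mathrm{T}}\{\mathbf{C}(\mathbf{D} + \mathbf{M}_c\mathbf{S}_{XX}^{-1}\mathbf{M}_c^{\mathrm{T}})\mathbf{C}^{\mathrm{T}}\}^{-1}(\mathbf{C}\hat{\boldsymbol{\upmu}}^*)}{c\,\hat{\upsigma}^2},$$

where \(\hat{\upsigma}^2 = \mathit{SSE}/\upnu\), \(\mathit{SSE} = \sum_{i=1}^G\sum_{j=1}^{N_i}(Y_{ij} - \bar{Y}_i)^2 - \mathbf{S}_{XY}^{\mathrm{T}}\mathbf{S}_{XX}^{-1}\mathbf{S}_{XY}\), and \(\upnu = N_T - G - P\). Note that the contrast matrix is confined to satisfy \(\mathbf{C}\mathbf{1}_G = \mathbf{0}_c\). Because \(\boldsymbol{\upmu}^* = \boldsymbol{\upmu} + \mathbf{1}_G\bar{\mathbf{X}}^{\mathrm{T}}\boldsymbol{\upbeta}\), it follows that \(\mathbf{C}\boldsymbol{\upmu}^* = \mathbf{C}\boldsymbol{\upmu}\). Hence, the general linear hypothesis of \(\mathrm{H}_0\colon \mathbf{C}\boldsymbol{\upmu}^* = \mathbf{0}_c\) versus \(\mathrm{H}_1\colon \mathbf{C}\boldsymbol{\upmu}^* \ne \mathbf{0}_c\) is equivalent to

$$\mathrm{H}_0: \mathbf{C}\boldsymbol{\upmu} = \mathbf{0}_c \quad\text{versus}\quad \mathrm{H}_1: \mathbf{C}\boldsymbol{\upmu} \ne \mathbf{0}_c.$$

Also, since \(\mathbf{C}\hat{\boldsymbol{\upmu}}^* = \mathbf{C}\hat{\boldsymbol{\upmu}}\) and \(\mathbf{C}\mathbf{M}_c = \mathbf{C}\mathbf{M}\), the Wald test statistic can be rewritten as

$$W^* = \frac{(\mathbf{C}\hat{\boldsymbol{\upmu}})^{\mathrm{T}}\{\mathbf{C}(\mathbf{D} + \mathbf{M}\mathbf{S}_{XX}^{-1}\mathbf{M}^{\mathrm{T}})\mathbf{C}^{\mathrm{T}}\}^{-1}(\mathbf{C}\hat{\boldsymbol{\upmu}})}{c\,\hat{\upsigma}^2}.$$

The Wald-type test has greater practical and pedagogical appeal than the test procedure under the full-reduced-model formulation. Because of its simplicity and generality, its properties are derived and presented in the subsequent illustration. Under the null hypothesis with \(\mathbf{C}\boldsymbol{\upmu} = \mathbf{0}_c\), the test statistic \(W^*\) has an F distribution

$$W^* \sim F(c, \upnu),$$

where \(F(c, \upnu)\) is an F distribution with c and \(\upnu\) degrees of freedom, \(\upnu = N_T - G - P\), and \(N_T = \sum_{i=1}^G N_i\). Hence, \(\mathrm{H}_0\) is rejected at the significance level \(\upalpha\) if \(W^* > F_{c, \upnu, \upalpha}\), where \(F_{c, \upnu, \upalpha}\) is the upper \(\upalpha\) quantile of \(F(c, \upnu)\). In general, conditional on the covariate values,

$$W^* \sim F(c, \upnu, \Lambda),$$

where \(F(c, \upnu, \Lambda)\) is a non-central F distribution with c and \(\upnu\) degrees of freedom and non-centrality parameter

$$\Lambda = \frac{(\mathbf{C}\boldsymbol{\upmu})^{\mathrm{T}}\{\mathbf{C}(\mathbf{D} + \mathbf{M}\mathbf{S}_{XX}^{-1}\mathbf{M}^{\mathrm{T}})\mathbf{C}^{\mathrm{T}}\}^{-1}(\mathbf{C}\boldsymbol{\upmu})}{\upsigma^2}.$$

The associated power function of the general linear hypothesis is readily obtained as

$$P\{F(c, \upnu, \Lambda) > F_{c, \upnu, \upalpha}\}.$$

The prescribed statistical inferences about the general linear hypothesis are based on the conditional distribution given the observed covariate values. As noted in Gatsonis and Sampson (1989), Mendoza and Stafford (2001), and Sampson (1974), the actual values of the covariates, like the primary responses, cannot be known in advance. It is therefore vital to treat the covariates as random variables and to derive the distribution of the test statistic over possible values of the covariate variables. Moreover, Elashoff (1969) and Harwell (2003) emphasized that the statistical assumptions underlying ANCOVA include the random assignment of subjects to treatments and the independence of the covariate variables from the treatment effects. In addition, the normal covariate setting is commonly employed to provide a fundamental framework for analytical derivation and theoretical discussion in ANCOVA studies, as in Elashoff (1969) and Harwell (2003). Thus, it is constructive to assume that the covariates have independent and identical multinormal distributions

$$\mathbf{X}_{ij} \sim N_P(\boldsymbol{\uptheta}, \boldsymbol{\Sigma}),$$

where \(\boldsymbol{\uptheta}\) is a \(P \times 1\) vector and \(\boldsymbol{\Sigma}\) is a \(P \times P\) positive-definite variance–covariance matrix for \(i = 1, \ldots, G\), and \(j = 1, \ldots, N_i\).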

Under the multinormal distribution of \(\<\mathbf

where \(\mathbf_\) is an identity matrix of dimension c. Accordingly, both \(\mathbf = \<\mathbf_ + \mathbf^<\mathrm>\>^<> \mathbf\) and \(\mathbf^* = \mathbf<\varvec<\upxi >>\) have an inverted matrix variate t-distribution (Gupta and Nagar 1999, Section 4.4):

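Following these results, standard matrix algebra shows that the non-centrality parameter \(\Lambda\) defined in Equation 15 has the alternative form

$$\Lambda = \frac{(\mathbf{C}\boldsymbol{\upmu})^{\mathrm{T}}(\mathbf{C}\mathbf{D}\mathbf{C}^{\mathrm{T}})^{-1}(\mathbf{C}\boldsymbol{\upmu})}{\upsigma^2}\cdot B^*,$$

where \(B^* = (1 - A^*) \sim \mathrm{Beta}\{(\upnu + 1)/2, P/2\}\). In connection with the effect size measures in ANOVA, the first component \((\mathbf{C}\boldsymbol{\upmu})^{\mathrm{T}}(\mathbf{C}\mathbf{D}\mathbf{C}^{\mathrm{T}})^{-1}(\mathbf{C}\boldsymbol{\upmu})/\upsigma^2\) in \(\Lambda\) is rewritten as

$$\frac{(\mathbf{C}\boldsymbol{\upmu})^{\mathrm{T}}(\mathbf{C}\mathbf{D}\mathbf{C}^{\mathrm{T}})^{-1}(\mathbf{C}\boldsymbol{\upmu})}{\upsigma^2} = N_T\upgamma^2,$$

where \(\upgamma^2 = \upsigma_{\upgamma}^2/\upsigma^2\), \(\upsigma_{\upgamma}^2 = (\mathbf{C}\boldsymbol{\upmu})^{\mathrm{T}}(\mathbf{C}\mathbf{Q}\mathbf{C}^{\mathrm{T}})^{-1}(\mathbf{C}\boldsymbol{\upmu})\), \(\mathbf{Q} = \mathrm{diag}(1/q_1, \ldots, 1/q_G)\), and \(q_i = N_i/N_T\) for \(i = 1, \ldots, G\); the identity follows because \(\mathbf{D} = \mathrm{diag}(1/N_1, \ldots, 1/N_G) = \mathbf{Q}/N_T\). Consequently, the non-centrality term \(\Lambda\) has a useful formulation

$$\Lambda = N_T\upgamma^2 B^*.$$

It should be pointed out that Gupta and Nagar (1999) provide only the generic definition and analytic properties of an inverted matrix variate t-distribution. Their results are applied and extended here to the context of ANCOVA. Accordingly, under the random covariate modeling framework, the \(W^*\) statistic has the two-stage distribution

$$W^* \mid B^* \sim F(c, \upnu, N_T\upgamma^2 B^*) \quad\text{and}\quad B^* \sim \mathrm{Beta}\{(\upnu + 1)/2, P/2\}.$$

The exact power function can be formulated as

$$E_{B^*}[P\{F(c, \upnu, N_T\upgamma^2 B^*) > F_{c, \upnu, \upalpha}\}],$$

where the expectation \(E_{B^*}\) is taken with respect to the distribution of \(B^*\).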
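As an informal check on the two-stage structure, the Python sketch below simulates the simplest case of a single contrast (c = 1) with one covariate (P = 1). There the conditional non-centrality reduces to \(\Lambda = N_T\upgamma^2 B^*\) with \(B^* = d/\{d + (\sum_i c_i\bar{x}_i)^2/S_{XX}\}\), where \(d = \sum_i c_i^2/N_i\), \(\bar{x}_i\) is the ith group covariate mean, and \(S_{XX}\) is the pooled within-group sum of squares of the covariate. The sketch compares the simulated mean of \(B^*\) with the mean \((\upnu + 1)/(\upnu + 1 + P)\) of the Beta\(\{(\upnu + 1)/2, P/2\}\) distribution. The group sizes, contrast, covariate mean, and covariate standard deviation are arbitrary illustrative choices, and the helper `simulate_b_star` is hypothetical. Reusing the same seed with a shifted and rescaled covariate also shows that the draws of \(B^*\) do not change, in line with the invariance to \(\boldsymbol{\uptheta}\) and \(\boldsymbol{\Sigma}\).

```python
import random


def simulate_b_star(contrast, sizes, theta, sd_x, reps, seed):
    """Monte Carlo draws of B* for one contrast (c = 1) and one covariate (P = 1).

    Hypothetical helper: B* = d / (d + (sum_i c_i * xbar_i)^2 / S_XX), where
    d = sum_i c_i^2 / N_i and S_XX is the pooled within-group sum of squares.
    """
    rng = random.Random(seed)
    d = sum(c * c / n for c, n in zip(contrast, sizes))
    draws = []
    for _ in range(reps):
        means, s_xx = [], 0.0
        for n in sizes:
            x = [rng.gauss(theta, sd_x) for _ in range(n)]  # X_ij ~ N(theta, sd_x^2)
            m = sum(x) / n
            means.append(m)
            s_xx += sum((v - m) ** 2 for v in x)
        cx = sum(c * m for c, m in zip(contrast, means))  # theta cancels: sum(c) = 0
        draws.append(d / (d + cx * cx / s_xx))
    return draws


sizes = (10, 10, 10)              # G = 3, N_T = 30 (illustrative)
contrast = (1.0, -1.0, 0.0)       # a single contrast, c = 1
p = 1                             # one covariate
nu = sum(sizes) - len(sizes) - p  # nu = N_T - G - P = 26

draws = simulate_b_star(contrast, sizes, theta=0.0, sd_x=1.0, reps=20000, seed=1)
mc_mean = sum(draws) / len(draws)
beta_mean = (nu + 1) / (nu + 1 + p)  # mean of Beta((nu + 1)/2, P/2)
print(f"simulated mean of B*: {mc_mean:.4f}, Beta mean: {beta_mean:.4f}")

# Same seed, shifted and rescaled covariate: B* is unchanged up to rounding.
base = simulate_b_star(contrast, sizes, 0.0, 1.0, reps=200, seed=7)
moved = simulate_b_star(contrast, sizes, 50.0, 7.0, reps=200, seed=7)
print(max(abs(u - v) for u, v in zip(base, moved)))
```

For c > 1 the same idea applies to the matrix form of \(\Lambda\); the sketch stays with c = 1 only to keep the algebra scalar.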

Notably, the omnibus test of the equality of treatment effects is a special case of the general linear hypothesis, obtained by setting \(\mathbf{C}\boldsymbol{\upmu} = \mathbf{0}_{G - 1}\) with the contrast matrix

$$\mathbf{C} = \begin{pmatrix} 1 & 0 & \cdots & 0 & -1 \\ 0 & 1 & \cdots & 0 & -1 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & 1 & -1 \end{pmatrix},$$

which is a \((G - 1) \times G\) contrast matrix of full row rank. The component \(\upgamma^2\) in the non-centrality term \(\Lambda\) simplifies to

$$\upgamma^2 = \updelta^2 = \frac{\upsigma_{\updelta}^2}{\upsigma^2},$$

where \(\upsigma_{\updelta}^2 = \sum_{i=1}^G q_i(\upmu_i - \tilde{\upmu})^2\) and \(\tilde{\upmu} = \sum_{i=1}^G q_i\upmu_i\). The corresponding non-centrality term \(\Lambda\) is expressed as

$$\Lambda = N_T\updelta^2 B^*.$$

The power function of the omnibus F test of treatment differences simplifies to

$$E_{B^*}[P\{F(G - 1, \upnu, N_T\updelta^2 B^*) > F_{G - 1, \upnu, \upalpha}\}].$$

Note that \(\upsigma_{\updelta}^2\) reduces to \(\upsigma_{\updelta}^2 = \sum_{i=1}^G(\upmu_i - \bar{\upmu})^2/G\) with \(\bar{\upmu} = \sum_{i=1}^G\upmu_i/G\) when \(q_i = 1/G\) for all \(i = 1, \ldots, G\). Hence, \(\updelta^2\) has the same form as the signal-to-noise ratio \(f^2\) in ANOVA (Fleishman 1980) for balanced designs. Although the prescribed application of the general linear hypothesis is discussed only from the perspective of a one-way ANCOVA design, the number of groups G may also represent the total number of combined factor levels of a multi-factor ANCOVA design. Hence, using a contrast matrix associated with a specific designated hypothesis, the same concept and process of assessing treatment effects can be readily extended to two-way and higher-order ANCOVA designs.
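The omnibus effect size is straightforward to compute directly. The Python sketch below evaluates \(\upsigma_{\updelta}^2\) and \(\updelta^2\) for the Maxwell and Delaney configuration used in the simulation section (\(\upmu_i = 400, 450, 500\), \(\upsigma^2 = 7{,}500\), \(\upsigma_Y^2 = 10{,}000\)). It also checks numerically, for G = 3 and a hypothetical unbalanced allocation, that \(\upsigma_{\updelta}^2\) agrees with the general form \((\mathbf{C}\boldsymbol{\upmu})^{\mathrm{T}}(\mathbf{C}\mathbf{Q}\mathbf{C}^{\mathrm{T}})^{-1}(\mathbf{C}\boldsymbol{\upmu})\), with contrast rows \((1, 0, -1)\) and \((0, 1, -1)\) and \(\mathbf{Q} = \mathrm{diag}(1/q_1, \ldots, 1/q_G)\). The function names are illustrative only.

```python
def omnibus_sigma2_delta(mu, q):
    """sigma_delta^2 = sum_i q_i (mu_i - mu_tilde)^2, with mu_tilde = sum_i q_i mu_i."""
    mu_tilde = sum(qi * mi for qi, mi in zip(q, mu))
    return sum(qi * (mi - mu_tilde) ** 2 for qi, mi in zip(q, mu))


def sigma2_gamma_g3(mu, q):
    """(C mu)' (C Q C')^{-1} (C mu) for G = 3 with C rows (1, 0, -1) and (0, 1, -1)."""
    a1, a2 = mu[0] - mu[2], mu[1] - mu[2]            # C mu
    w1, w2, w3 = 1.0 / q[0], 1.0 / q[1], 1.0 / q[2]  # diagonal of Q
    m11, m12, m22 = w1 + w3, w3, w2 + w3             # entries of the 2 x 2 matrix C Q C'
    det = m11 * m22 - m12 * m12
    # quadratic form using the explicit inverse of a symmetric 2 x 2 matrix
    return (m22 * a1 * a1 - 2.0 * m12 * a1 * a2 + m11 * a2 * a2) / det


mu = (400.0, 450.0, 500.0)
balanced = (1 / 3, 1 / 3, 1 / 3)
s2d = omnibus_sigma2_delta(mu, balanced)
delta2 = s2d / 7500.0   # ANCOVA error variance (1 - 0.5^2) * 10,000
f2 = s2d / 10000.0      # ANOVA error variance sigma_Y^2
print(f"sigma_delta^2 = {s2d:.2f}, delta^2 = {delta2:.4f}, f^2 = {f2:.4f}")
# sigma_delta^2 = 1666.67, delta^2 = 0.2222, f^2 = 0.1667

unbalanced = (0.5, 0.3, 0.2)  # hypothetical allocation ratios q_i
gap = abs(omnibus_sigma2_delta(mu, unbalanced) - sigma2_gamma_g3(mu, unbalanced))
print(f"difference between the two forms: {gap:.2e}")
```

With these inputs the ANCOVA effect size exceeds the ANOVA one by the factor \(1/(1 - \uprho^2) = 4/3\), which drives much of the sample size saving of ANCOVA over ANOVA.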

It is essential to note that the exact power function depends on the group intercepts \(\{\upmu_1, \ldots, \upmu_G\}\) and the variance component \(\upsigma^2\) through the non-centrality \(\Lambda\) or the effect size \(\upgamma^2\), but not on the covariate coefficients \(\{\upbeta_1, \ldots, \upbeta_P\}\). Also, under the prescribed stochastic assumptions for the covariate variables, the multivariate normal distribution leads to a distinctive conditional structure in which the test statistic \(W^*\) is a non-central F variable given a beta-distributed \(B^*\). Owing to the fundamental property of the contrast matrix, the resulting distribution and power function do not depend on the mean vector \(\boldsymbol{\uptheta}\) and the variance–covariance matrix \(\boldsymbol{\Sigma}\) of the multinormal covariate distribution. To determine sample sizes in planning research designs, the exact power function can be applied to calculate the sample sizes \(\{N_1, \ldots, N_G\}\) needed to attain the specified power \(1 - \upbeta\) for the chosen significance level \(\upalpha\), contrast matrix C, intercept parameters \(\{\upmu_1, \ldots, \upmu_G\}\), variance component \(\upsigma^2\), and number of covariates P.

For an ANCOVA design with a priori designated sample size ratios \(\{r_1, \ldots, r_G\}\), where \(r_i = N_i/N_1\) for \(i = 1, \ldots, G\), the required computation simplifies to finding the minimum sample size \(N_1\) (with \(N_i = N_1 \cdot r_i\), \(i = 2, \ldots, G\)) required to achieve the selected power level with the exact power function. Using the embedded functions in popular software systems, optimal sample sizes can be readily computed through an iterative process. The SAS/IML (SAS Institute 2017) and R (R Development Core Team 2017) programs employed to perform the suggested power and sample size calculations are available as supplementary material. The proposed power and sample size procedures for the general linear hypothesis tests of ANCOVA subsume the results in Shieh (2017) for a single contrast test as a special case. Notably, the derivations and manipulations of an inverted matrix variate t distribution are more involved than those of a Hotelling’s \(T^2\) distribution as demonstrated in Shieh (2017).

Alternatively, a simple procedure for the comparison of treatment effects has been described in Cohen (1988, pp. 379–380). Unlike the proposed two-stage distribution, it is suggested that \(W^*\) has a simplified F distribution

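$$W^* \sim F(c, \upnu, N_T\upgamma^2).$$

It is easily seen from the model assumption given in Equation 1 that \(\upsigma_Y^2 = \mathrm{Var}(Y_{ij}) = \boldsymbol{\upbeta}^{\mathrm{T}}\boldsymbol{\Sigma}\boldsymbol{\upbeta} + \upsigma^2\), so that \(\upsigma^2 = (1 - \uprho^2)\upsigma_Y^2\), where \(\uprho^2 = \boldsymbol{\upbeta}^{\mathrm{T}}\boldsymbol{\Sigma}\boldsymbol{\upbeta}/\upsigma_Y^2\) is the squared multiple correlation between the response and the covariates.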

To further demonstrate the contrasting features and practical consequences of the proposed approach and existing methods, detailed empirical appraisals are conducted to examine their performance in power and sample size calculations. For ease of comparison, the numerical illustration considered in Maxwell and Delaney (2004, pp. 441-443) for sample size planning and power analysis is utilized as the fundamental framework.

In particular, Maxwell and Delaney (2004) described an ANOVA design with \(G = 3\), group intercepts \(\{\upmu_1, \upmu_2, \upmu_3\} = \{400, 450, 500\}\), and error variance \(\upsigma_Y^2 = 10{,}000\). Then, an ANCOVA model is introduced with the inclusion of an influential covariate variable X with \(\uprho = \mathrm{Corr}(X, Y) = 0.5\) to partially account for the variance in the response variable Y. The corresponding unexplained error variance \(\upsigma^2\) in ANCOVA is reduced to \(\upsigma^2 = (1 - \uprho^2)\upsigma_Y^2 = 7{,}500\). To detect the treatment differences, they showed that the total sample sizes required to attain a nominal power of 0.80 are 63 and 48 for the balanced ANOVA and ANCOVA designs, respectively. Thus, the ANCOVA design can attain the same power with nearly 25% fewer subjects than the ANOVA design. It should be noted that the ANOVA and approximate ANCOVA power formulas given in Equations 31 and 32 were applied for the sample size calculations in Maxwell and Delaney (2004). To examine the implications of these sample size procedures, an extensive simulation study was performed under a wide range of model configurations.
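The ANOVA and approximate ANCOVA sample sizes quoted above can be re-derived with a short script. The self-contained Python sketch below is one way to do it, assuming both formulas are the usual non-central F power functions: for ANOVA, \(F(G - 1, N_T - G)\) with non-centrality \(N_T\upsigma_{\updelta}^2/\upsigma_Y^2\); for the approximate ANCOVA formula, \(F(G - 1, N_T - G - P)\) with non-centrality \(N_T\upsigma_{\updelta}^2/\{(1 - \uprho^2)\upsigma_Y^2\}\), that is, with \(B^*\) effectively set to 1. Non-central F tail probabilities are computed from the standard Poisson mixture of regularized incomplete beta functions, using a textbook continued-fraction routine written only for this illustration; a library implementation such as SciPy's `scipy.stats.ncf` would normally be preferred. The helper names are hypothetical, and this search is not the exact procedure proposed in the article.

```python
import math


def _betacf(a, b, x, eps=1e-14, max_iter=1000):
    """Continued fraction for the regularized incomplete beta (modified Lentz)."""
    tiny = 1e-300
    c, d = 1.0, 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        for num in (m * (b - m) * x / ((a + m2 - 1.0) * (a + m2)),
                    -(a + m) * (a + b + m) * x / ((a + m2) * (a + m2 + 1.0))):
            d = 1.0 + num * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + num / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_crit(d1, d2, alpha):
    """Upper-alpha critical value of the central F(d1, d2), by bisection."""
    lo, hi = 0.0, 1.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if betainc(d1 / 2.0, d2 / 2.0, mid) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    y = 0.5 * (lo + hi)
    return d2 * y / (d1 * (1.0 - y))


def ncf_sf(f, d1, d2, lam, tol=1e-12):
    """P{F(d1, d2, lam) > f} as a Poisson(lam/2) mixture of beta CDFs."""
    y = d1 * f / (d1 * f + d2)
    half, cdf, total, j = lam / 2.0, 0.0, 0.0, 0
    while True:
        w = (math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
             if half > 0 else float(j == 0))
        cdf += w * betainc(d1 / 2.0 + j, d2 / 2.0, y)
        total += w
        j += 1
        if (1.0 - total < tol and j > half) or j > 100_000:
            break
    return 1.0 - cdf


def omnibus_power(n, mu, error_var, p, alpha=0.05):
    """Balanced-design power: p = 0 with sigma_Y^2 gives ANOVA; p = P with
    (1 - rho^2) * sigma_Y^2 gives the approximate ANCOVA formula (no B*)."""
    g = len(mu)
    n_t = g * n
    d1, d2 = g - 1, n_t - g - p
    if d2 < 1:
        return 0.0  # not enough error degrees of freedom
    mean = sum(mu) / g
    effect = sum((m - mean) ** 2 for m in mu) / g / error_var
    return ncf_sf(f_crit(d1, d2, alpha), d1, d2, n_t * effect)


def smallest_n(power_fn, target=0.80, n_max=10_000):
    """Smallest balanced group size n whose power reaches the target."""
    for n in range(2, n_max + 1):
        if power_fn(n) >= target:
            return n
    raise ValueError("target power not reached")


mu, sigma2_y, rho = (400.0, 450.0, 500.0), 10_000.0, 0.5
n_anova = smallest_n(lambda n: omnibus_power(n, mu, sigma2_y, p=0))
n_ancova = smallest_n(lambda n: omnibus_power(n, mu, (1 - rho ** 2) * sigma2_y, p=1))
print(3 * n_anova, 3 * n_ancova)  # 63 48
```

The search returns balanced group sizes of 21 and 16, which agree with the totals of 63 and 48 reported by Maxwell and Delaney (2004).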

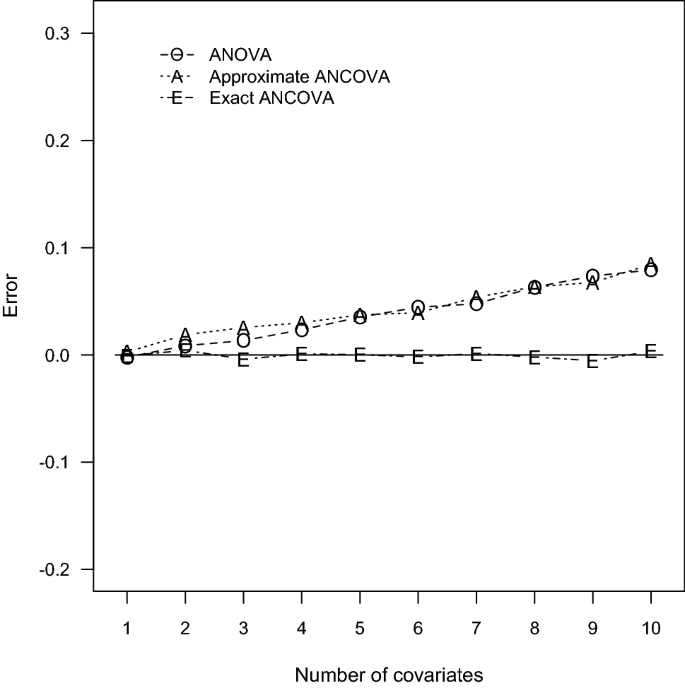

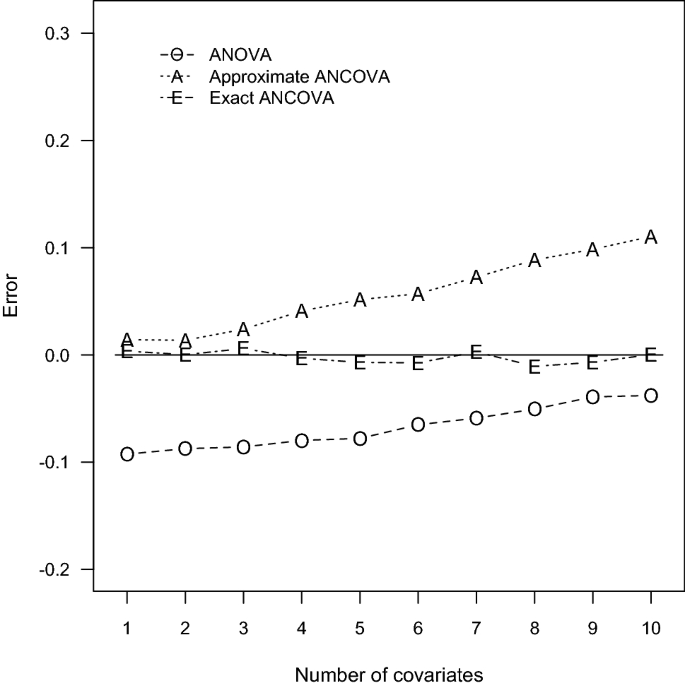

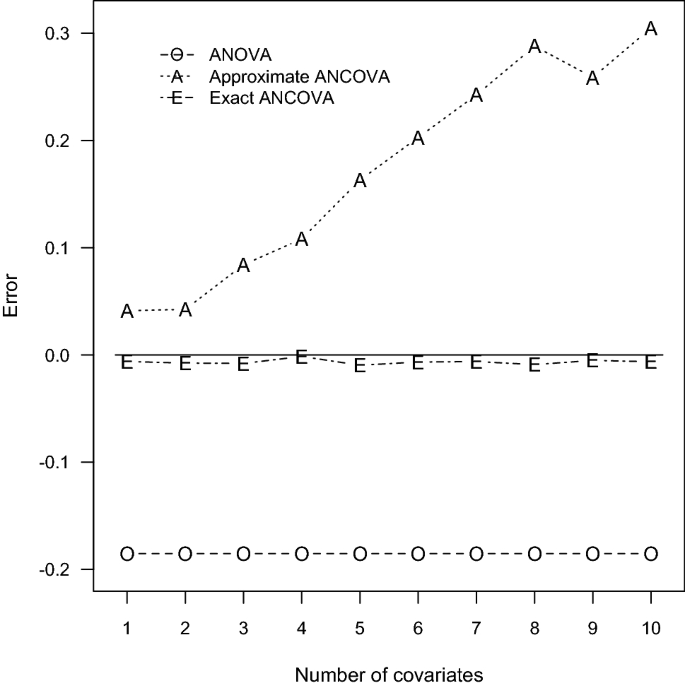

According to the power comparisons, the ANOVA method generally does not provide accurate sample size calculations for an ANCOVA design. Unsurprisingly, the only exceptions occurred when the number of covariates is small and the correlation between the covariates and the response variable is close to zero, as in Tables 1 and 4. The approximate ANCOVA method consistently gives larger power estimates than the simulated power for all cases considered here. The discrepancy noticeably increases with the number of covariates and the magnitude of the effect size. The resulting errors can be as large as 0.0845, 0.1105, and 0.3049 for the scenarios with \(P = 10\) in Tables 1, 2, and 3, respectively. For the relatively smaller effect sizes in Tables 4, 5, and 6, the approximate ANCOVA formula performs better, with errors of 0.0506, 0.0628, and 0.2710 for the cases of \(P = 10\). Consequently, the overestimation by the approximate ANCOVA power function implies that the computed sample sizes are generally inadequate to achieve the designated power level.

Regarding the accuracy of the proposed exact ANCOVA approach, the corresponding results in Tables 1, 2, 3, 4, 5 and 6 show that the differences between the estimated and simulated powers are fairly small. The largest absolute error is 0.0106 for the two cases of \(P = 8\) and 5 in Tables 2 and 5, respectively. All the other 58 cases in Tables 1, 2, 3, 4, 5 and 6 have an absolute error less than 0.01. These numerical results imply that the proposed exact approach outperforms the ANOVA method and the approximate ANCOVA procedure for all design configurations considered here. Therefore, the suggested power and sample size calculations can be recommended for general use.
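Simulated powers of the kind reported in Tables 1 to 6 are typically obtained as the proportion of simulated data sets in which the ANCOVA F test rejects \(\mathrm{H}_0\). The Python sketch below illustrates this for G = 3 groups and one normal covariate; the configuration, the 3,000 replications, and the helper names are hypothetical and far smaller than a full study. The omnibus statistic is computed through the full-versus-reduced model comparison, which coincides with the Wald statistic for this linear model, and the critical value uses the closed form \(F_{2, \upnu, \upalpha} = (\upnu/2)(\upalpha^{-2/\upnu} - 1)\), which is valid only for 2 numerator degrees of freedom.

```python
import random


def sums_of_squares(xs, ys):
    """Centered sums of squares and cross-products (S_xx, S_xy, S_yy)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((a - mx) ** 2 for a in xs)
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    syy = sum((b - my) ** 2 for b in ys)
    return sxx, sxy, syy


def omnibus_f(groups):
    """Omnibus ANCOVA F with one covariate: full (G intercepts) vs reduced (one intercept)."""
    wxx = wxy = wyy = 0.0
    for x, y in groups:  # pooled within-group sums give the full-model SSE
        sxx, sxy, syy = sums_of_squares(x, y)
        wxx, wxy, wyy = wxx + sxx, wxy + sxy, wyy + syy
    sse_full = wyy - wxy ** 2 / wxx
    all_x = [a for x, _ in groups for a in x]
    all_y = [b for _, y in groups for b in y]
    txx, txy, tyy = sums_of_squares(all_x, all_y)  # total sums: reduced model
    sse_reduced = tyy - txy ** 2 / txx
    g, nu = len(groups), len(all_x) - len(groups) - 1
    return ((sse_reduced - sse_full) / (g - 1)) / (sse_full / nu), nu


def simulated_power(mu, beta, sigma, n, reps, alpha=0.05, seed=2024):
    """Rejection rate of the omnibus test over simulated data sets (G = 3 only)."""
    if len(mu) != 3:
        raise ValueError("the closed-form critical value assumes G = 3")
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        groups = []
        for m in mu:
            x = [rng.gauss(0.0, 1.0) for _ in range(n)]
            y = [m + beta * xi + rng.gauss(0.0, sigma) for xi in x]
            groups.append((x, y))
        f, nu = omnibus_f(groups)
        crit = (nu / 2.0) * (alpha ** (-2.0 / nu) - 1.0)  # F_{2, nu, alpha}
        rejections += f > crit
    return rejections / reps


null_rate = simulated_power((0.0, 0.0, 0.0), beta=1.0, sigma=1.0, n=10, reps=3000)
alt_rate = simulated_power((0.0, 0.5, 1.0), beta=1.0, sigma=1.0, n=10, reps=3000)
print(f"rejection rate under H0: {null_rate:.3f}, under H1: {alt_rate:.3f}")
```

Under \(\mathrm{H}_0\) the rejection rate should hover around \(\upalpha = 0.05\), since the conditional F test is exact for normal errors; under the alternative it estimates the power that the analytic formulas try to predict.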

A documented example of Maxwell and Delaney (2004) is presented and extended next to demonstrate the usefulness of the suggested power and sample size procedures and accompanying software programs for the omnibus test of treatment effects in ANCOVA designs.

Specifically, Maxwell and Delaney (2004, Table 9.7, p. 429) provided the data for assessing the effectiveness of different interventions for depression. There are 10 participants randomly assigned to each of three intervention groups: (1) selective serotonin reuptake inhibitor (SSRI) antidepressant medication, (2) placebo, or (3) wait list control. The measurements are the pretest and posttest Beck Depression Inventory (BDI) scores of depressive individuals. The primary interest of the ANCOVA study is in the group differences of posttest BDI measurements using the pretest BDI scores as the covariate. The results show that the estimates of adjusted group means and error variance are \(\{\hat{\upmu}_1^*, \hat{\upmu}_2^*, \hat{\upmu}_3^*\} = \{7.5366, 11.9849, 13.9785\}\) and \(\hat{\upsigma}^2 = 29.0898\), respectively. The omnibus F test statistic of treatment differences is \(W^* = 3.73\), which yields a p-value of 0.0376. Therefore, the test result suggests that the intervention effects are significantly different at \(\upalpha = 0.05\). Although this is not the focus of the illustration in Maxwell and Delaney (2004), an ANOVA of the posttest scores gives the variance estimate \(\hat{\upsigma}_Y^2 = 39.6185\). Hence, the sample squared correlation between the posttest and pretest BDI scores is \(\hat{\uprho}^2 = 1 - \hat{\upsigma}^2/\hat{\upsigma}_Y^2 = 1 - 29.0898/39.6185 = 0.2658\). The observed value of the ANOVA F test of group differences is \(F^* = 3.03\) with a p-value of 0.0647. At the significance level 0.05, the null hypothesis of no intervention group difference on the posttest BDI scores cannot be rejected. Although null hypothesis significance testing is useful in various applications, it is important to consult the recent articles of Wasserstein and Lazar (2016) and Wasserstein et al. (2019) for the recommended principles underlying the proper use and interpretation of statistical significance and p-values.
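The adjusted means, \(\hat{\upsigma}^2\), and \(W^*\) reported above follow directly from the estimators described earlier. Since the Table 9.7 data are not reproduced here, the Python sketch below applies those formulas to a small made-up data set with G = 3 groups and one covariate; both the data and the helper `ancova_summary` are hypothetical. It computes the pooled within-group slope, the adjusted means \(\bar{Y}_i - \hat{\upbeta}(\bar{x}_i - \bar{x})\) (with \(\bar{x}_i\) and \(\bar{x}\) the group and grand covariate means), \(\hat{\upsigma}^2 = \mathit{SSE}/\upnu\), and the omnibus Wald statistic with contrast rows \((1, 0, -1)\) and \((0, 1, -1)\), and compares the last with the full-versus-reduced model F, which is algebraically identical for this linear model.

```python
def ancova_summary(groups):
    """One-covariate ANCOVA for G = 3 groups given as (x_list, y_list) pairs.

    Returns the pooled slope, adjusted means, sigma^2 hat = SSE / nu, the
    omnibus Wald statistic W*, and the full-versus-reduced model F.
    """
    n = [len(x) for x, _ in groups]
    xbar = [sum(x) / len(x) for x, _ in groups]
    ybar = [sum(y) / len(y) for _, y in groups]
    sxx = sum((a - mx) ** 2 for (x, _), mx in zip(groups, xbar) for a in x)
    sxy = sum((a - mx) * (b - my)
              for (x, y), mx, my in zip(groups, xbar, ybar) for a, b in zip(x, y))
    syy = sum((b - my) ** 2 for (_, y), my in zip(groups, ybar) for b in y)
    n_t, g, p = sum(n), len(groups), 1
    beta = sxy / sxx                                   # pooled within-group slope
    grand_x = sum(ni * m for ni, m in zip(n, xbar)) / n_t
    adjusted = [yb - beta * (xb - grand_x) for yb, xb in zip(ybar, xbar)]
    nu = n_t - g - p
    sse_full = syy - sxy ** 2 / sxx
    sigma2 = sse_full / nu
    # Wald form: mu_hat_i = ybar_i - beta * xbar_i, V = D + xbar xbar' / S_XX
    mu_hat = [yb - beta * xb for yb, xb in zip(ybar, xbar)]
    v = [[(1.0 / n[i] if i == j else 0.0) + xbar[i] * xbar[j] / sxx for j in range(3)]
         for i in range(3)]
    rows = [(1.0, 0.0, -1.0), (0.0, 1.0, -1.0)]
    a = [sum(r[i] * mu_hat[i] for i in range(3)) for r in rows]
    cvc = [[sum(r[i] * v[i][j] * s[j] for i in range(3) for j in range(3)) for s in rows]
           for r in rows]
    det = cvc[0][0] * cvc[1][1] - cvc[0][1] * cvc[1][0]
    quad = (cvc[1][1] * a[0] ** 2 - 2.0 * cvc[0][1] * a[0] * a[1]
            + cvc[0][0] * a[1] ** 2) / det
    w_star = quad / (2.0 * sigma2)                     # c = 2 contrasts
    # Full-versus-reduced F: the reduced model has a single common intercept.
    xs = [u for x, _ in groups for u in x]
    ys = [u for _, y in groups for u in y]
    my = sum(ys) / n_t
    txx = sum((u - grand_x) ** 2 for u in xs)
    txy = sum((u - grand_x) * (w - my) for u, w in zip(xs, ys))
    tyy = sum((w - my) ** 2 for w in ys)
    f_models = ((tyy - txy ** 2 / txx - sse_full) / (g - 1)) / sigma2
    return beta, adjusted, sigma2, w_star, f_models


toy = [  # made-up (covariate, response) data, NOT the Table 9.7 data
    ([3.0, 5.0, 4.0, 6.0], [6.0, 9.0, 7.0, 10.0]),
    ([4.0, 6.0, 5.0, 7.0], [9.0, 12.0, 10.0, 14.0]),
    ([2.0, 5.0, 3.0, 6.0], [9.0, 13.0, 10.0, 15.0]),
]
beta, adjusted, sigma2, w_star, f_models = ancova_summary(toy)
print(f"beta = {beta:.4f}, adjusted means = {[round(m, 4) for m in adjusted]}, sigma2 = {sigma2:.4f}")
# beta = 1.5250, adjusted means = [8.2542, 9.9792, 12.7667], sigma2 = 0.1234
print(f"W* = {w_star:.6f}, full-vs-reduced F = {f_models:.6f}")
```

The agreement of the two F values is the numerical counterpart of the equivalence between the Wald and full-reduced-model formulations; the adjusted means also average to the grand response mean in this balanced toy design.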

In view of the prospective nature of advance research planning, general guidelines suggest that published findings or expert opinions can offer reliable information about the vital characteristics of a future study. Accordingly, it is prudent to adopt a minimal meaningful effect size in order to enhance the generalizability of the result and the accumulation of scientific knowledge. For illustration, the prescribed summary statistics of the three-group depression intervention study are employed as the population adjusted mean effects and variance component. The suggested power procedure shows that the resulting exact power for the omnibus test of group differences is 0.6145 when the significance level \(\upalpha\) equals 0.05. Because the computed power is substantially smaller than the common levels of 0.80 or 0.90, the group sample size \(N = 10\) does not provide a decent chance of detecting the potential differences between treatment groups. To determine the proper sample size, the proposed sample size computations show that balanced group sample sizes of 15 and 19 are required to attain the nominal power of 0.8 and 0.9, respectively. The total sample sizes \(N_T = 45\) and 57 are substantially larger than the 30 of the exemplifying design; that is, the sample size must increase by 50% and 90% to meet the common power levels of 0.80 and 0.90, respectively. These design configurations are used as the input values in the user specification sections of the supplementary SAS/IML and R programs. Researchers can easily identify these statements and then modify the input values in the computer code to incorporate their own model characteristics.

ANCOVA provides a useful approach for combining the advantages of two widely established procedures, ANOVA and multiple linear regression. Despite the close resemblance among the three types of statistical analyses, their power computation and sample size determination are still theoretically distinct when the stochastic properties of the continuous covariates or predictors are taken into account. It is generally recognized that an ANCOVA design may require considerably fewer subjects than an ANOVA design to attain the required precision and power. For planning and evaluating randomized ANCOVA designs, an ANOVA-based sample size formula has been proposed in Cohen (1988) to accommodate the reduced error variance and degrees of freedom resulting from the use of effective and influential covariates. The procedure is very appealing from a computational standpoint and has been implemented in some statistical packages. However, the literature offers no further analytical discussion or numerical evaluation to validate the appropriateness and implications of Cohen’s (1988) method.

This article aims to address the potential limitation and approximate nature of the prevailing method and to describe an alternative and exact approach for power and sample size calculations in ANCOVA designs. It is demonstrated both theoretically and empirically that the seemingly exact technique of Cohen (1988) does not account for all of the covariate properties in ANCOVA. Exact power and sample size procedures are described for the general linear hypothesis tests of treatment effects under the assumption that the covariate variables have a joint multinormal distribution. The simulation results reveal that the proposed technique is superior to the current method under a wide range of ANCOVA designs. More importantly, additional numerical assessments show that the suggested power function and sample size procedure preserve reasonably good performance under various non-normal situations, such as exponential, Gamma, Laplace, log-normal, uniform, and discrete uniform distributions. Hence, the proposed two-stage distribution and power function of the Wald statistic for the general linear hypothesis tests possess desirable robustness properties and are also applicable to other continuous covariate distributions in various ANCOVA designs. Consequently, the presented methodology extends the power assessment and sample size determination of Shieh (2017) for contrast analysis in ANCOVA. To enhance practical value, computer algorithms are also provided to facilitate the recommended power calculations and sample size determinations. With respect to the importance and implementation of random sampling, the fundamental and standard sampling designs and estimation methods can be found in Thompson (2012). Heterogeneity of variance is one of the unique and problematic factors known to be detrimental to the statistical inferences in ANCOVA (Harwell 2003; Rheinheimer and Penfield 2011).
A potential topic for future study is to develop proper power and sample size procedures within the variance heterogeneity framework.

The author is grateful to the Associate Editor and referees for their valuable comments and suggestions which greatly improved the presentation and content of the article.